The Productivity Paradox Nobody Talks About

Here's a number that should make every business owner pause: 96% of executives expect AI to boost productivity. Meanwhile, 77% of the people actually using these tools say AI has made their jobs harder.

That's not a small gap. That's a canyon.

The Upwork Research Institute surveyed 2,500 people across the US, UK, Australia, and Canada in 2024. They talked to 1,250 C-suite executives, 625 full-time employees, and 625 freelancers. The findings paint a picture of two completely different realities.

Executives see AI as the answer. Workers see it as another thing on their plate. Both are looking at the same tools. So what's going on?

What's Actually Eating Workers' Time?

The Upwork study broke down exactly where the extra work comes from. The numbers tell a clear story.

39% of workers report spending more time reviewing or moderating AI-generated content. That draft the AI wrote? Someone has to check it. That code suggestion? Someone has to verify it won't break production. That customer response? Someone has to make sure it doesn't say something embarrassing.

23% are investing more time learning how to use the tools. Every new AI feature means another tutorial, another workflow change, another set of prompts to figure out.

21% say they're asked to do more as a direct result of AI. The logic goes like this: "AI made you faster, so now you can handle more." Except the first part of that sentence isn't always true.

And here's the kicker: 65% of employees said their employer's demands are overwhelming. AI didn't lighten the load. It shifted it. Instead of doing the work, workers now manage the AI doing the work—while also doing more work because the AI was supposed to make them faster.

Why Do Executives and Employees See Different Realities?

The perception gap is staggering. 37% of employers believe their people are highly skilled with AI tools. Only 17% of employees feel the same way about themselves.

That's a 20-point difference in confidence. Executives think the team has this figured out. The team disagrees.

Nearly half—47%—of employees using AI report they don't know how to achieve the expected productivity gains. They're using the tools. They're clicking the buttons. But they can't figure out how to make it actually save time.

Meanwhile, 85% of company leaders are pushing workers to use AI technology. Just over a third have started mandating certain AI tools.

So you have executives pushing tools that workers don't feel equipped to use, measuring against productivity gains that workers don't know how to achieve. The workers aren't resisting AI. They're drowning in it.

I've watched this pattern play out across dozens of implementations. The disconnect usually comes down to who's measuring what. Executives see adoption rates and feature usage. Workers see their actual calendars.

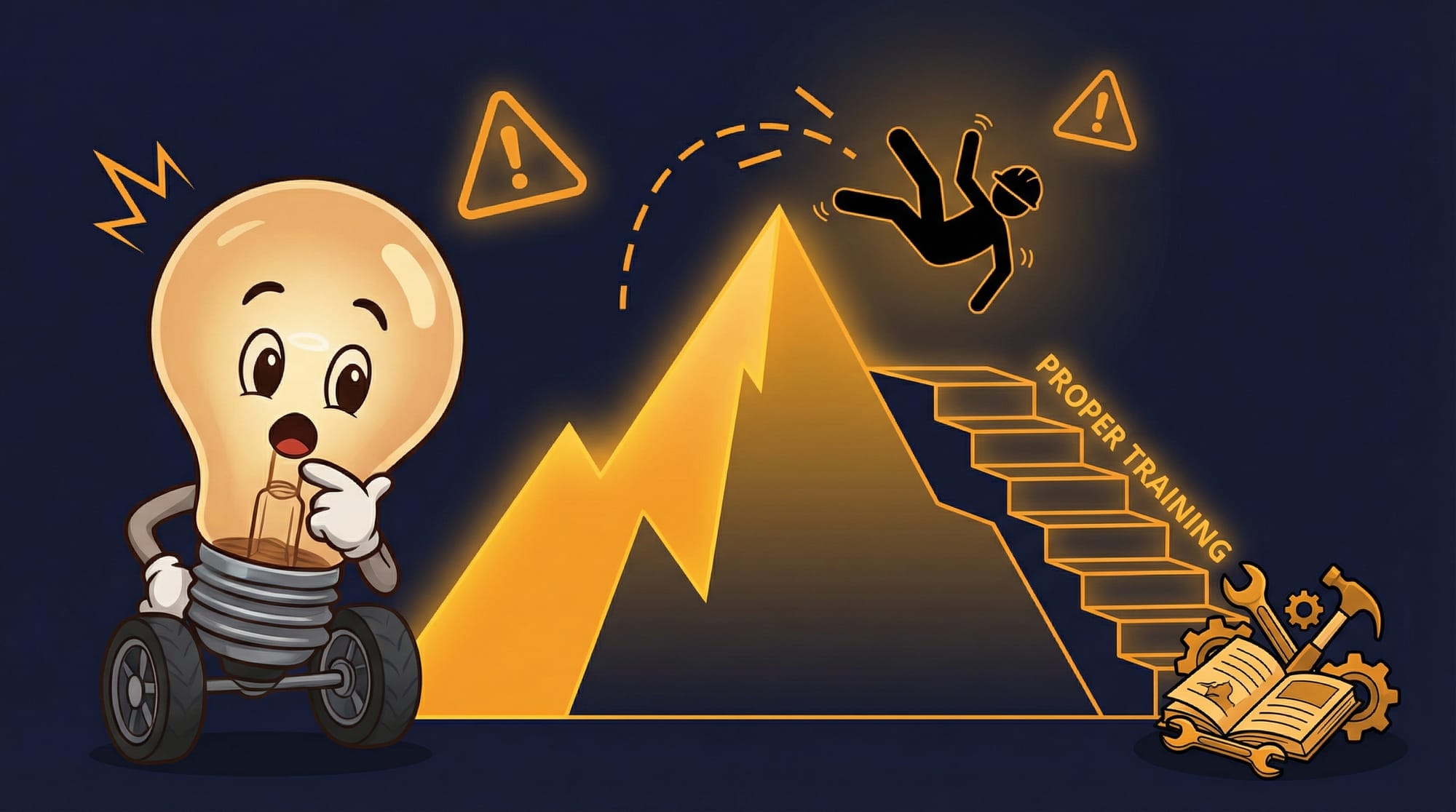

The J-Curve Pattern: Why It Gets Worse Before It Gets Better

Here's something that reframes this whole conversation: technology adoption follows a J-curve pattern. There's an initial productivity dip before any upswing.

The "J" shape comes from the investment required upfront. Learning takes time. Experimentation takes time. Building new workflows takes time. All of that happens before you see returns.

Most companies skip this part in their planning. They announce the AI rollout, set productivity targets, and expect the curve to go up immediately. When it goes down first—which it will—everyone panics.

The Upwork study found that most organizations are currently failing to unlock the full productivity value of AI technology. That word "currently" matters. They're stuck at the bottom of the J.

AI is contributing to employee burnout, according to the same study. When you're in the dip phase but being measured on peak-phase expectations, burnout is inevitable.
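To make the shape concrete, here is a toy sketch in Python. It is not fitted to the Upwork data or to any real rollout. The function names and every parameter (a 20% learning overhead that fades over 8 weeks, a 25% gain that ramps up over 16) are assumptions invented purely for illustration.

```python
def relative_productivity(week, learning_cost=0.20, learning_weeks=8,
                          full_gain=0.25, ramp_weeks=16):
    """Toy J-curve: productivity relative to a pre-rollout baseline of 1.0.

    All parameters are illustrative assumptions, not measured values.
    """
    # Learning overhead starts at learning_cost and fades linearly to zero.
    overhead = learning_cost * max(0.0, 1 - week / learning_weeks)
    # Gains ramp up linearly until they reach full_gain.
    gain = full_gain * min(1.0, week / ramp_weeks)
    return 1.0 - overhead + gain


def weeks_until_break_even(max_weeks=52, **params):
    """First week after launch where productivity is back at or above baseline."""
    for week in range(1, max_weeks + 1):
        if relative_productivity(week, **params) >= 1.0:
            return week
    return None  # never recovers within max_weeks


if __name__ == "__main__":
    for week in (0, 4, 8, 16):
        print(week, round(relative_productivity(week), 3))
    print("break-even week:", weeks_until_break_even())
```

With these made-up defaults, productivity starts at 80% of baseline at launch and crosses back over baseline in week 5. Change the assumptions and the dip gets deeper or longer. That is the point: the dip is a planning input, not a surprise.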

How Do You Roll Out AI Without Burning Out Your Team?

Time magazine's analysis of successful AI programs found a clear pattern. The teams that made it through the J-curve did four things differently.

- **Focus on potential, not productivity.** Successful programs emphasize experimentation over metrics. They ask "what could this do?" before they ask "how much time did it save?" Measuring productivity during the learning phase is like grading someone's first driving lesson on fuel efficiency.

- **Invest in learning time upfront.** This means actual calendar time, not "learn it on your lunch break." Training, support, and space to experiment. The investment pays off later, but it has to happen first.

- **Start at the team level.** Let teams figure out what works for their specific workflows. The accounting team's AI use case is different from marketing's. Mandating the same approach across the board ignores context.

- **Name the transition cost.** Tell people explicitly: "The next 60 days will be harder, not easier. We're investing now for payoff later." When workers know the dip is expected, they don't interpret it as failure.

If you're building out AI implementation strategies, this framing changes everything. You're not deploying a tool. You're asking for an upfront investment that pays dividends later.

What Happens When You Skip the Learning Curve?

Picture this: It's Thursday afternoon. Your CEO just read an article about AI productivity gains. By Monday, there's a company-wide Slack message about the new AI writing tool everyone needs to start using.

No training budget. No workflow guidance. No adjusted deadlines. Just a login and an expectation.

Three weeks later, the marketing team is spending 40% longer on blog posts. They write the draft, run it through the AI, then spend an hour fixing the AI's output, then wonder why they didn't just write it themselves. But they can't—management is tracking tool adoption.

The AI didn't fail. The rollout failed.

In my 30+ years building production systems, I've seen this movie before. Different technology, same pattern. Every new tool requires a learning tax. The question is whether you pay it deliberately or accidentally.

What Are the Hidden Costs of AI Adoption?

The 77% number gets all the headlines. But the real story is in what companies aren't accounting for.

- **Review overhead compounds.** Every AI output needs human review. One person reviewing AI content is manageable. Ten people reviewing AI content across an organization adds up to hundreds of hours monthly. Nobody budgets for this.

- **Skill gaps are invisible until they're not.** That 47% who don't know how to achieve productivity gains? They're still using the tools. They're just using them poorly. Bad AI usage often looks like normal work—until you compare output quality.

- **Expectation inflation happens immediately.** The moment AI is deployed, workload expectations increase. But productivity gains are delayed by the J-curve. You get the extra expectations now and the extra capacity later. The gap is where burnout lives.

- **Tool fatigue is real.** 23% spending more time learning isn't just about one tool. It's about the fourth AI tool this quarter. Each one has different prompts, different quirks, different workflows. The cognitive load accumulates.

- **The best workers get hit hardest.** High performers often become the default AI troubleshooters. They figure out the tool, then spend their time helping everyone else. Their productivity drops so others' can rise.

When you're thinking about AI operations and efficiency, these hidden costs need to be in the spreadsheet. The license fee is the smallest line item.
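The review-overhead line can be roughed out with back-of-the-envelope arithmetic. The sketch below is one way to do it. The function name and the sample inputs (20 AI outputs per person per week, 15 minutes of review each) are hypothetical, not figures from the Upwork study.

```python
def monthly_review_hours(reviewers, outputs_per_week, minutes_per_review,
                         weeks_per_month=4):
    """Estimate hours per month spent reviewing AI output.

    Every input is an assumption you supply; nothing here is measured data.
    """
    minutes_per_week = reviewers * outputs_per_week * minutes_per_review
    return minutes_per_week * weeks_per_month / 60


if __name__ == "__main__":
    # Hypothetical inputs: 20 outputs per person per week, 15 minutes each.
    print("one reviewer:", monthly_review_hours(1, 20, 15))
    print("ten reviewers:", monthly_review_hours(10, 20, 15))
```

Under those assumed inputs, one reviewer spends about 20 hours a month checking AI output, and ten reviewers spend about 200. Plug in your own numbers before putting anything in the budget.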

How Do You Know Your AI Rollout Is Working?

Forget adoption rates. Forget feature usage dashboards. Here's what actually tells you if AI is helping or hurting.

- **Task completion time is dropping, not just task count rising.** More output with the same hours is good. More output with more hours is a red flag.

- **Workers are choosing to use the tools when not required.** Mandated usage tells you about compliance. Voluntary usage tells you about value.

- **Review cycles are getting shorter over time.** Early AI output needs heavy editing. Mature AI usage produces output that needs a light touch. Track the trend.

- **People can articulate specific use cases.** Ask workers: "What's one thing the AI does that saves you real time?" If they can't answer specifically, they haven't found the value yet.

- **Overtime and weekend work are decreasing.** This is the ultimate test. If AI is truly boosting productivity, people should be getting their work done in less time. If overtime is flat or rising, something's wrong.
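The first signal in the list above (more output in the same hours is good, more output in more hours is a red flag) is simple enough to check in code. This is a minimal sketch, assuming you can pull task counts and hours worked for a team before and after the rollout. The `Period` class, the `assess_rollout` function, and the labels it returns are all hypothetical names made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Totals for one team over one measurement period (hypothetical schema)."""
    tasks_completed: int
    hours_worked: float

    def hours_per_task(self) -> float:
        if self.tasks_completed == 0:
            raise ValueError("no completed tasks in this period")
        return self.hours_worked / self.tasks_completed


def assess_rollout(before: Period, after: Period) -> str:
    """Apply the rule of thumb: output up with hours flat or down is good."""
    more_output = after.tasks_completed > before.tasks_completed
    more_hours = after.hours_worked > before.hours_worked
    if more_output and not more_hours:
        return "good"
    if more_output and more_hours:
        return "red flag"
    return "unclear"  # output flat or down: look at the other signals


if __name__ == "__main__":
    before = Period(tasks_completed=40, hours_worked=160)
    after = Period(tasks_completed=50, hours_worked=200)
    print(assess_rollout(before, after), after.hours_per_task())
```

The check is deliberately blunt. It treats any rise in hours as a warning, even when hours per task improve, because the question it answers is whether people are getting their time back, not whether the dashboard looks busier.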

Frequently Asked Questions

Does this mean AI isn't actually useful for business?

No. The 77% number reflects current rollout practices, not AI's potential. Companies that invest in proper training and realistic timelines see gains. The problem isn't the technology—it's deploying it without accounting for the learning curve. Most organizations are stuck in the J-curve dip, not at the end of the story.

How long does the J-curve dip typically last?

It varies by complexity and support level. For simple tools with good training, 4-8 weeks. For complex AI systems integrated into core workflows, 3-6 months. The key variable is dedicated learning time. Teams that get protected time to experiment recover faster than teams expected to learn while maintaining normal output.

Should we slow down AI adoption based on this data?

Not necessarily. The fix is to restructure, not to slow down. The issue isn't speed of adoption. It's adopting without the support infrastructure. Before launching any AI tool, budget for training time, designate internal experts, and explicitly lower productivity expectations for the transition period. Then you can move as fast as you want.

What if my team is already burned out from AI tools?

Pause new rollouts. Audit which tools are actually being used versus merely mandated. Ask teams directly: which AI features save you time, and which create work? Cut the ones creating work. For the remaining tools, provide remedial training focused on the specific use cases that matter. Sometimes the fix is less AI, not more.

How do I convince executives that productivity will dip before it rises?

Use the J-curve framing explicitly. Show them the Upwork data: 96% of executives expect gains, but 77% of workers report increased workload. The gap is the J-curve in action. Propose a 90-day pilot with realistic expectations. Measure time-per-task, not just output volume. Let the data make the argument.

What This Means for Your Monday Morning

- **The 77% stat isn't about AI failing—it's about rollouts failing.** Most companies deploy AI tools without budgeting for the learning investment. Workers pay that cost in overtime and stress.

- **The J-curve is real: expect productivity to drop before it rises.** Technology adoption has an upfront cost. Name it explicitly so workers don't interpret the dip as personal failure.

- **There's a 20-point confidence gap between executives and employees.** 37% of leaders think their teams are skilled with AI. Only 17% of workers agree. Close this gap before piling on more tools.

- **Review overhead is the hidden tax nobody budgets for.** 39% of workers spend extra time checking AI output. This time has to come from somewhere.

- **Focus on potential first, productivity second.** Successful AI programs emphasize experimentation during the learning phase. Metrics come after workflows stabilize.

The gap between AI expectations and AI reality isn't permanent. But closing it requires treating adoption as an investment, not a flip of a switch. The companies figuring this out—the ones building real AI strategy—are the ones that will actually see those productivity gains everyone keeps promising.

Everyone else? They're still waiting for the magic to kick in.