A practical, 4-week plan with KPIs to turn a shiny demo into a reliable employee.

You’ve seen the 20-minute YouTube demo: someone “builds an AI agent” before your coffee cools. It looks easy. Almost too easy.

Your instinct is right—real customers don’t talk like demos. The polished clip shows the first 25% (a working prototype). The missing 75% is the hard, crucial part: testing, refining, and adding guardrails so the agent doesn’t frustrate customers, burn leads, or create compliance risk.

This post isn’t about the 25%. It’s the playbook for the 75%—how to turn a slick demo into a reliable employee.

Series: AI Implementation Guides

Who it’s for: Business Owners, Founders, & Team Leads

Outcome: Understand the 75/25 Rule and adopt a practical testing framework that leads to positive ROI.

Start here: To implement a reliable AI strategy that actually books meetings without frustrating leads → Explore AI for Lead Generation

Ready to apply this? Get the 30-day checklist → AI for Lead Generation

In This Article (Table of Contents)

- The 25% Trap: Why That "Easy" AI Demo Is a Minefield

- The 75% Solution: The Professional's Testing Playbook

- Case Study: How Pros Test an AI Receptionist (Reduce Costs)

- Case Study: How Pros Test a "Speed to Lead" Bot (Grow Revenue)

- Your "Production-Ready" AI: A 4-Week Plan

- The Final Verdict: Your AI Is Only as Good as Its Guardrails

- Frequently Asked Questions

- Key Terms You Need to Know

- Related Playbooks

The 25% Trap: Why That "Easy" AI Demo Is a Minefield

Generative AI is non-deterministic: you can’t predict exactly what it will say next. A demo looks flawless because it only follows the happy path, where every input is phrased exactly as expected.

The Breakthrough (That Isn’t)

The 25% demo is a short prompt + a single tool (e.g., a calendar). It works when you test it with ideal phrasing.

The Business Impact

Once real people touch it, weak spots show up fast:

- Frustrated customers: loops, mishears, and awkward phrasing → hang-ups.

- Burned leads: robotic tone → fewer booked meetings (and wasted ad spend).

- Compliance risk: missed disclaimers, incorrect info, over-promising.

Bottom line: an untested demo can cost brand trust and revenue. The value is unlocked in the 75%.

The 75% Solution: The Professional's Testing Playbook

Winning teams don’t rely on “better prompts.” They rely on better process. Pros often spend 2 weeks building and 6 weeks testing a production-grade agent: the same 25/75 split.

The Pro Playbook (What to Steal)

Stage 1: Internal Testing

Try to break it with interruptions, slang, accents, background noise, and partial answers.

Stage 2: Automated Simulations

Use AI to test AI. Create caller “personas” (angry, elderly, rushed, heavy accent, emergency) and run scripted scenarios until failure patterns surface.
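Here’s a minimal sketch of how the Stage 2 persona grid might be generated, assuming a second LLM plays the caller. The persona and scenario texts, `build_caller_prompt`, and `runs_per_pair` are all hypothetical; no specific simulation tool is implied. Each pair runs several times because the same input can produce different agent replies.

```python
from itertools import product

# Hypothetical caller personas for a second LLM to role-play.
PERSONAS = {
    "angry": "You are furious about a missed appointment and interrupt often.",
    "elderly": "You speak slowly, ask for things to be repeated, and dislike jargon.",
    "rushed": "You are in a hurry and give short, partial answers.",
    "heavy_accent": "You have a strong regional accent and use local slang.",
    "emergency": "You smell gas in your house and are panicking.",
}

# Hypothetical goals the simulated caller tries to accomplish.
SCENARIOS = {
    "book_repair": "Book a repair visit for next week.",
    "billing_only": "Ask only about a charge on your last invoice.",
    "price_shopping": "Get a rough price without committing to anything.",
}


def build_caller_prompt(persona: str, scenario: str) -> str:
    """Combine one persona and one scenario into instructions for the simulated caller."""
    return (
        f"{PERSONAS[persona]} Your goal: {SCENARIOS[scenario]} "
        "Stay in character for the whole call."
    )


def simulation_jobs(runs_per_pair: int = 3) -> list[dict]:
    """Every persona x scenario pair, repeated because the agent is non-deterministic."""
    return [
        {"persona": p, "scenario": s, "run": r, "caller_prompt": build_caller_prompt(p, s)}
        for p, s in product(PERSONAS, SCENARIOS)
        for r in range(runs_per_pair)
    ]


jobs = simulation_jobs()
print(len(jobs))  # 45 = 5 personas x 3 scenarios x 3 runs
```

Each job’s `caller_prompt` would go to whichever model plays the caller, and the resulting transcripts are what you scan for failure patterns. For a voice agent, the caller’s text would also need to pass through text-to-speech to test real accents and background noise; that step isn’t shown here.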

Stage 3: Live Monitoring

Soft-launch to a small slice of traffic. Review transcripts. Score each conversation. Fix before scaling.

Mindset shift: Don’t ask “Does it work?” Ask “How does it fail—and how do we prevent that failure from reaching customers?”
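For Stage 3’s soft launch, one simple way to carve out “a small slice of traffic” is to hash each caller or lead ID into 100 buckets. This is a sketch, not a specific platform feature: `in_soft_launch` is a hypothetical helper, the 10% default mirrors the Week 4 plan below, and anyone outside the slice would stay on your current human process.

```python
import hashlib


def in_soft_launch(caller_id: str, percent: int = 10) -> bool:
    """Return True if this caller/lead should be handled by the AI agent.

    Hashing the ID (rather than picking randomly on every call) keeps each
    caller in the same group, so nobody bounces between AI and human.
    """
    digest = hashlib.sha256(caller_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in 0..99
    return bucket < percent


# Rough check: about 10% of 10,000 hypothetical lead IDs land in the AI group.
share = sum(in_soft_launch(f"lead-{i}") for i in range(10_000)) / 10_000
print(f"{share:.1%}")
```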

Case Study: How Pros Test an AI Receptionist (Reduce Costs)

This maps to our Reduce Costs pillar.

The 25% Demo

The agent answers calls, asks a few questions, and books appointments in Google Calendar. It looks like it will save 10 hours a week.

The 75% “Pro” Test

“What happens when…”

- “…a caller says ‘I smell gas’ or ‘It’s flooding’?” → Emergency pathway + instant human transfer.

- “…they ask for billing only?” → Smart routing and transfer etiquette.

- “…you’re recording calls?” → Auto-disclaimer every time.

Why it matters: Guardrails prevent legal exposure and angry reviews—so the savings don’t come at the expense of risk.

What to measure (first 30 days), tuned for home services but applicable to any niche:

- First-Contact Resolution (FCR): % of calls resolved without a second touch.

- Accurate Routing Rate: % of calls transferred to the right person on first attempt.

- Compliance Fidelity: % of calls where required disclaimers (recording/AI disclosure) were read correctly.

- Emergency Handling SLA: Median time from “emergency keyword” → human pickup.
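To make these four KPIs concrete, here’s a sketch that computes them from a list of call records. The `CallLog` fields are hypothetical stand-ins for whatever your phone system or CRM exports, and the sample calls are made up for illustration.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class CallLog:
    # Hypothetical fields: map them to whatever your phone system or CRM exports.
    resolved_first_contact: bool   # no second touch was needed
    transferred: bool              # the agent handed the call to a person
    routed_right_first_try: bool   # only counted when transferred is True
    disclaimer_required: bool      # recording/AI disclosure required for this call
    disclaimer_read: bool          # the agent read it correctly
    emergency_keyword_at: Optional[float] = None  # seconds into the call
    human_pickup_at: Optional[float] = None       # seconds into the call


def pct(part: int, whole: int) -> Optional[float]:
    """Percentage rounded to one decimal, or None when there is nothing to measure."""
    return round(100 * part / whole, 1) if whole else None


def receptionist_kpis(calls: list[CallLog]) -> dict:
    transfers = [c for c in calls if c.transferred]
    required = [c for c in calls if c.disclaimer_required]
    flagged = [c for c in calls if c.emergency_keyword_at is not None]
    waits = [c.human_pickup_at - c.emergency_keyword_at
             for c in flagged if c.human_pickup_at is not None]
    return {
        "fcr_pct": pct(sum(c.resolved_first_contact for c in calls), len(calls)),
        "routing_pct": pct(sum(c.routed_right_first_try for c in transfers), len(transfers)),
        "compliance_pct": pct(sum(c.disclaimer_read for c in required), len(required)),
        "emergency_median_s": median(waits) if waits else None,
        # Emergencies no human ever picked up: reported separately so they can't hide.
        "emergency_unanswered": len(flagged) - len(waits),
    }


calls = [
    CallLog(True, False, False, True, True),
    CallLog(False, True, True, True, True),
    CallLog(True, True, False, True, False),
    CallLog(False, True, True, True, True, emergency_keyword_at=12.0, human_pickup_at=31.0),
]
print(receptionist_kpis(calls))
# {'fcr_pct': 50.0, 'routing_pct': 66.7, 'compliance_pct': 75.0,
#  'emergency_median_s': 19.0, 'emergency_unanswered': 0}
```

Counting unanswered emergencies separately matters: a median that silently drops the calls nobody picked up would make the SLA look better than it is.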

Compliance check (2 minutes):

- Always read the “call recorded” + “AI assistant” disclaimer where required.

- Route emergencies to a human immediately (keywords: gas leak, flood, electrical burning smell, “urgent”).

- Log transfer attempts and outcomes for audit (ticket or CRM note).

- Review the first 50 transcripts for risky phrasing (refunds, guarantees, medical/legal advice).
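Two of these checks can be enforced in code before the model ever replies: the disclaimer and the emergency keywords. The sketch below assumes you can intercept each caller utterance as text; `route_utterance`, `opening_line`, and the disclaimer wording are hypothetical. Keyword matching will miss paraphrases (“there’s water everywhere”), so treat it as a floor and use simulation to find the gaps.

```python
import re
from datetime import datetime, timezone

# Keywords from the checklist above; extend the list for your trade.
EMERGENCY_PATTERNS = [
    r"\bgas leak\b",
    r"\bsmell gas\b",
    r"\bflood(ing|ed)?\b",
    r"\bburning smell\b",
    r"\burgent\b",
]
EMERGENCY_RE = re.compile("|".join(EMERGENCY_PATTERNS), re.IGNORECASE)

# Hypothetical wording: have someone qualified approve the real text.
DISCLAIMER = "This call may be recorded, and you're speaking with an AI assistant."


def opening_line(greeting: str, disclaimer_required: bool) -> str:
    """Prepend the disclaimer in code so it never depends on the model remembering."""
    return f"{DISCLAIMER} {greeting}" if disclaimer_required else greeting


def route_utterance(utterance: str, audit_log: list) -> str:
    """Check each caller utterance BEFORE the model replies.

    Returns "transfer_to_human" on an emergency keyword, else "continue_with_ai".
    """
    if EMERGENCY_RE.search(utterance):
        # Record the attempt for audit; the outcome (picked up, voicemail, ...)
        # would be appended once the transfer completes.
        audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "event": "emergency_transfer_attempt",
            "utterance": utterance,
        })
        return "transfer_to_human"
    return "continue_with_ai"


audit = []
print(opening_line("Thanks for calling. How can I help?", disclaimer_required=True))
print(route_utterance("It's flooding in the basement!", audit))  # transfer_to_human
```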

Case Study: How Pros Test a "Speed to Lead" Bot (Grow Revenue)

This maps to our Lead Generation pillar.

The 25% Demo

New form fill → AI calls instantly → tries to book a call.

The 75% “Pro” Test

“What happens when…”

- They’re busy: Offer SMS follow-up + one-tap reschedule link.

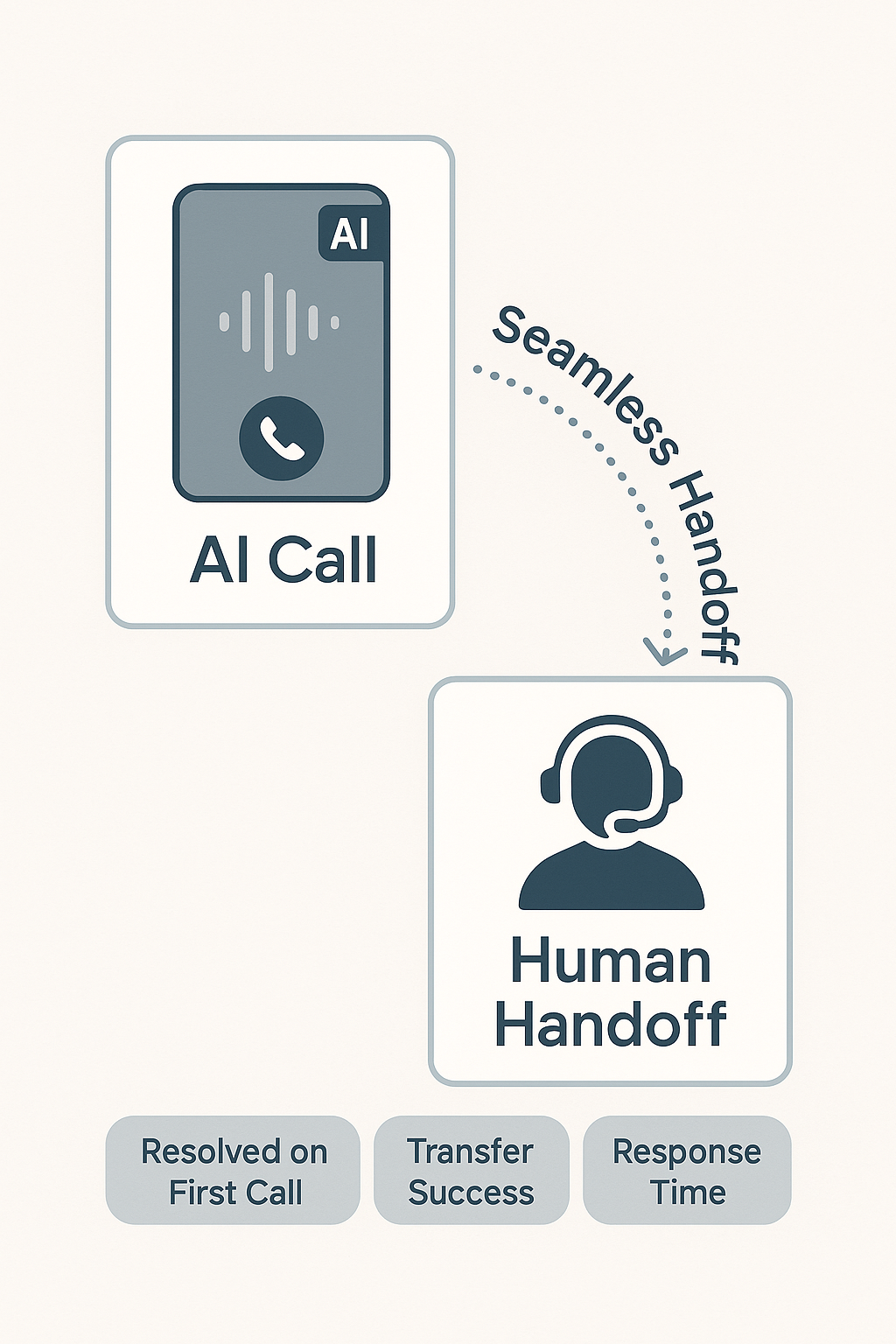

- They’re skeptical (“Are you a robot?”): Empathetic acknowledgement + optional human handoff.

- They’re ‘just looking’: Nurture path (send estimate ranges, FAQs, before/after gallery) rather than forcing a booking.

- They have a strong accent or are in a noisy environment: Slow down, confirm key details, and repeat them back.
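One way to keep these behaviors consistent is to map each situation to a fixed playbook rather than let the model improvise. This sketch assumes something upstream (an LLM classifier, for example) has already labeled the situation; the situation labels and action names are hypothetical placeholders for your own integrations.

```python
from enum import Enum


class LeadSituation(Enum):
    # Hypothetical labels; detecting them (LLM classifier, keywords, etc.) is out of scope here.
    READY = "ready"
    BUSY = "busy"
    SKEPTICAL = "skeptical"
    JUST_LOOKING = "just_looking"
    HARD_TO_HEAR = "hard_to_hear"   # strong accent or noisy environment


# Each situation gets a fixed, reviewable playbook instead of improvised behavior.
# Action names are illustrative placeholders for your own tools/integrations.
PLAYBOOK: dict[LeadSituation, list[str]] = {
    LeadSituation.READY: ["book_meeting"],
    LeadSituation.BUSY: ["send_sms_followup", "send_reschedule_link"],
    LeadSituation.SKEPTICAL: ["acknowledge_concern", "offer_human_handoff"],
    LeadSituation.JUST_LOOKING: ["send_estimate_ranges", "send_faqs", "send_gallery"],
    LeadSituation.HARD_TO_HEAR: ["slow_down", "confirm_key_details", "repeat_back"],
}

# Fail fast if someone adds a situation without a playbook.
assert set(PLAYBOOK) == set(LeadSituation), "every situation needs a playbook"


def next_actions(situation: LeadSituation) -> list[str]:
    return list(PLAYBOOK[situation])  # copy so callers can't mutate the playbook


print(next_actions(LeadSituation.SKEPTICAL))  # ['acknowledge_concern', 'offer_human_handoff']
```

Keeping the playbook as plain data also makes it easy to review: anyone can check that “just looking” leads never get a forced booking.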

Why it matters: With guardrails, you turn more form fills into qualified meetings—without annoying prospects.

What to measure (first 30 days), tuned for home services but applicable to any niche:

- Connect Rate: % of leads that answer the first outreach (call/SMS).

- Qualified Meeting Rate: % of leads who meet your criteria and show up for the appointment.

- Response Time (Speed-to-Lead): Median seconds from form submit → first contact attempt.

- No-Show Reduction: % drop in no-shows after reminders + easy reschedule.

- Cost per Kept Appointment (CPKA): Ad spend / number of completed appointments.
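Here’s a sketch of the five lead-gen KPIs computed from raw lead records. The `Lead` fields are hypothetical, and “No-Show Reduction” is assumed to mean a relative drop (20% → 15% no-shows is a 25% reduction); if you report it in percentage points instead, change that one line. The sample numbers are invented.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Lead:
    # Hypothetical fields; timestamps are in seconds (e.g., Unix time).
    submitted_at: float
    first_attempt_at: Optional[float]  # None if no contact attempt was made
    answered_first_outreach: bool      # picked up the call or replied to the SMS
    qualified: bool                    # met your qualification criteria
    showed: bool                       # attended (kept) the appointment


def lead_kpis(leads: list[Lead], ad_spend: float,
              no_show_before: float, no_show_after: float) -> dict:
    """KPIs for one reporting period. Assumes leads is non-empty and no_show_before > 0."""
    n = len(leads)
    delays = [x.first_attempt_at - x.submitted_at
              for x in leads if x.first_attempt_at is not None]
    kept = sum(x.showed for x in leads)
    return {
        "connect_rate_pct": 100 * sum(x.answered_first_outreach for x in leads) / n,
        "qualified_meeting_rate_pct": 100 * sum(x.qualified and x.showed for x in leads) / n,
        "speed_to_lead_median_s": median(delays) if delays else None,
        # Relative drop (assumed definition): 20% -> 15% no-shows is a 25% reduction.
        "no_show_reduction_pct": 100 * (no_show_before - no_show_after) / no_show_before,
        "cpka": ad_spend / kept if kept else None,
    }


leads = [
    Lead(0, 30, True, True, True),
    Lead(100, 145, True, True, False),
    Lead(200, 260, False, False, False),
    Lead(300, None, False, False, False),
]
print(lead_kpis(leads, ad_spend=600.0, no_show_before=0.20, no_show_after=0.15))
```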

Tip: For trades, mention “photo upload” or “address verification” in the script. Both boost trust and improve qualification quality.

Your "Production-Ready" AI: A 4-Week Plan

You don’t need a data team—just a clear scoreboard and tight feedback loops.

Week 1 — Build the “25% Demo”

- Pick one process (e.g., FAQ answering or lead qualification).

- Define 1 KPI (e.g., “book 5 qualified meetings”).

- Ship a v0.1 that works end-to-end at least once.

Week 2 — Internal Testing

- Have 3–5 teammates try to break it.

- Log each failure: prompt → agent reply → ideal reply → fix.

- Apply the first 10–15 fixes.
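The Week 2 log doesn’t need special tooling; a shared spreadsheet works. If you want it structured, here’s a minimal sketch with one row per failure, following the prompt → agent reply → ideal reply → fix format. `FixLogEntry`, its `tester` and `status` fields, and the sample entry are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class FixLogEntry:
    # One row per failure found during internal testing (Week 2).
    tester: str
    prompt: str          # what the teammate said to the agent
    agent_reply: str     # what the agent actually said
    ideal_reply: str     # what it should have said
    fix: str             # the prompt or guardrail change you made
    status: str = "open" # flip to "fixed" once a re-test passes


def to_csv(entries: list[FixLogEntry]) -> str:
    """Serialize the log so it can be pasted into a shared spreadsheet."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(FixLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buf.getvalue()


log = [
    FixLogEntry(
        tester="Sam",
        prompt="uh yeah can u do like thursday-ish",
        agent_reply="I'm sorry, I didn't understand that.",
        ideal_reply="Sure. Thursday morning or afternoon?",
        fix="Added instruction to treat vague day references as a request to offer slots.",
    ),
]
print(to_csv(log))
```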

Week 3 — Simulate & Refine

- Write 10 edge-case scenarios.

- Role-play them; add guardrails (transfer rules, disclaimers, tone cues).

- Re-test until every scenario passes.
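“Re-test until pass” is easier to enforce with a small regression suite. This sketch assumes your agent can be called as a function from caller text to reply text; `EdgeCase`, `run_suite`, and `stub_agent` are hypothetical, and the substring checks are a crude stand-in for human review or an LLM grader. Each case runs several times and only passes if every run passes, since a non-deterministic agent can succeed once and fail the next time.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EdgeCase:
    name: str
    caller_says: str
    must_include: list[str] = field(default_factory=list)      # e.g., transfer phrase
    must_not_include: list[str] = field(default_factory=list)  # e.g., "guarantee"


def check(case: EdgeCase, agent_reply: str) -> list[str]:
    """Return the failures for a single reply; an empty list means it passed."""
    reply = agent_reply.lower()
    failures = [f"missing: {p}" for p in case.must_include if p.lower() not in reply]
    failures += [f"forbidden: {p}" for p in case.must_not_include if p.lower() in reply]
    return failures


def run_suite(cases: list[EdgeCase], agent: Callable[[str], str], runs: int = 5) -> dict:
    """Run every case several times; a case passes only if all runs pass."""
    report = {}
    for case in cases:
        failures: list[str] = []
        for _ in range(runs):
            failures += check(case, agent(case.caller_says))
        report[case.name] = sorted(set(failures))  # empty list = pass
    return report


def stub_agent(caller_text: str) -> str:
    """Hypothetical stand-in for a call to your real agent."""
    if "gas" in caller_text.lower():
        return "I'm transferring you to a technician right now."
    return "We guarantee same-day service!"


cases = [
    EdgeCase("gas_leak", "I smell gas", must_include=["transferring you"]),
    EdgeCase("refund_ask", "Will you refund me if it breaks?", must_not_include=["guarantee"]),
]
report = run_suite(cases, stub_agent)
print(report)  # {'gas_leak': [], 'refund_ask': ['forbidden: guarantee']}
```

Here the stub over-promises on the refund question, which is exactly the kind of failure to fix and re-test before Week 4.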

Week 4 — Go Live & Monitor

- Launch to 10% of traffic.

- Review the first 50 conversations.

- Keep / Kill / Scale based on KPI.
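If you want the Keep / Kill / Scale call to be less of a gut decision, write the rule down before launch. The thresholds below (at least 50 reviewed conversations, “kill” under half the target) are assumptions for illustration, not a standard, and `keep_kill_scale` is a hypothetical helper.

```python
def keep_kill_scale(kpi_actual: float, kpi_target: float,
                    reviewed: int, critical_failures: int,
                    min_reviewed: int = 50, kill_ratio: float = 0.5) -> str:
    """Hypothetical end-of-Week-4 decision rule; every threshold here is an assumption.

    critical_failures: e.g., a missed emergency transfer or a skipped disclaimer.
    """
    if reviewed < min_reviewed:
        return "keep"   # too little evidence: stay at 10% and keep reviewing
    if kpi_actual < kill_ratio * kpi_target:
        return "kill"   # far below target: rethink the use case
    if critical_failures > 0 or kpi_actual < kpi_target:
        return "keep"   # fix and re-test before adding traffic
    return "scale"      # on target with no critical failures


# Using the Week 1 example KPI: "book 5 qualified meetings".
print(keep_kill_scale(kpi_actual=6, kpi_target=5, reviewed=50, critical_failures=0))  # scale
```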

The Final Verdict: Your AI Is Only as Good as Its Guardrails

Your competitive edge this year isn’t model choice—it’s operations maturity. Logging, scoring, edge-case testing, compliance rules, and human handoff are the difference between a clever demo and a dependable employee.

A well-tested B+ agent will beat a chaotic A+ demo every time.

Frequently Asked Questions

What is the “75/25 Rule” for AI?

25% is building a working demo. 75% is testing, refining, and monitoring so it holds up with real customers.

Why is testing AI different from normal software?

Traditional software is deterministic. Generative AI isn’t—it needs scenario testing and guardrails, not just pass/fail unit tests.

What is an “AI persona” for testing?

A scripted caller profile (e.g., angry, elderly, strong accent, emergency) used to pressure-test conversations before you go live.

Do I need a data team to start?

No. Start with one workflow, one KPI, and a 4-week loop: internal testing → edge-case simulation → soft launch → review 50 conversations.

What’s the first KPI to track?

For sales: qualified meeting rate. For service: first-contact resolution. For back-office: hours saved per employee per week.

Key Terms You Need to Know

- The 25% Trap: Believing a 20-minute demo equals production-ready.

- The 75% Solution: The disciplined testing + guardrails work that creates ROI.

- Edge Cases: Uncommon but critical scenarios (emergency, compliance).

- Guardrails: Rules that force safe behavior (transfer now, read disclaimer).

Want the printable checklist? Join the newsletter and get the 1-page 75/25 audit PDF.

Related Playbooks

Explore next:

AI for Lead Generation · AI for Cost Reduction · AI for Customer Retention